Polymer science advances drug-delivery mechanisms to better break down cancerous tumors. The National Cancer Institute has indicated that in 2014, “it is estimated that there will be 1,665,540 new cases of all cancer sites and an estimated 585,720 people will die of this disease.” That’s why Katie Bratlie, an assistant professor of materials science and …

Category: Research Highlights

A new kind of solar cell

Improving the performance of organic, thin-film materials with perovskites to make solar energy more affordable and accessible. With the sun providing most forms of energy, whether directly or indirectly, it’s no wonder Vikram Dalal has spent more than 40 years working on better ways to harness its energy. But it’s the potential to provide the …

Advancing production in large-scale industries

Calling for a multidisciplinary, value-driven philosophy for systems engineering. Energy. Transportation. Civil infrastructure. Aerospace. These and many other large-scale, complex industries are critical to the security and prosperity of our nation. And they are in need of some serious attention. That’s where Christina Bloebaum says she can make a difference. Bloebaum, the Dennis and Rebecca Muilenburg Professor of Aerospace …

Revolutionizing disease prevention and treatment

Using a systems approach coupled with nanoscale technology to develop next-generation vaccines. For more than a decade, Balaji Narasimhan has been determined to improve vaccine delivery and availability, a mission that’s especially important for parts of the world where access to such life-saving, preventive medicine isn’t always practical or even possible. One project includes searching for ways to …

Reducing the cost of wind energy

Sensing skin monitors structural health of wind turbine blades, giving insight into needed repairs. An inexpensive polymer that can detect damage on large-scale surfaces could be pivotal in making wind energy a more affordable alternative energy source. The material, which is made into 3-inch square pieces, is a nanocomposite elastomeric capacitor fabricated from a dielectric …

A smarter power grid

Cyber-physical testbed gives realistic platform for power grid security research. With the nation’s security and economic vitality in his sights, Manimaran Govindarasu is setting out to make the power grid infrastructure more resilient against evolving and continuous cyberattacks. These attacks could result in anything from compromised data within utility companies to significant blackouts across the country. …

Transient electronics research featured on Fox News

Fox News featured transient electronics research by Reza Montazami, assistant professor of mechanical engineering. The goal of the research is to make electronic devices that can be triggered to dissolve and become unusable. Access the Fox News video story below, and read more about Montazami’s research in a story by ISU News Service. Fox News – …

Study results from Iowa State University broaden understanding of control engineering

New study results on control engineering have been published. According to news reported from Ames, Iowa, by VerticalNews correspondents, the research paper “presents a methodology, motivated by aerospace applications, to use second-order cone programming to solve non convex optimal control problems. The non convexity arises from the presence of concave state inequality constraints and nonlinear terminal equality constraints.” The paper concluded, …

Levitas uses basic statics principles to solve long-standing problem in interface science formulated by Gibbs

Valery Levitas, Schafer Professor and faculty member in aerospace engineering and mechanical engineering, found a rigorous and simple solution to a classical problem in interface and surface science formulated by J. W. Gibbs in the 19th century: how to define the position of a dividing surface. The Gibbsian view of …

Iowa State, Italy Researchers Collaborate on Structural Health Monitoring

Two cultures collaborate to develop novel structural detection methods. Researchers from Iowa State University and the University of Perugia (Italy) compare U.S.-patented soft elastomeric surface sensors with Italian cement-based embeddable sensors. Both technologies are being developed as novel nanocomposite solutions for dynamic structural monitoring. The goal is to provide cost-effective solutions for locally …

Iowa State’s Icing Wind Tunnel Blows Cold and Hard to Study Ice on Wings, Turbine Blades

From somewhere back behind the Iowa State University Icing Research Tunnel, Rye Waldman called out to see if Hui Hu was ready for a spray of cold water. The wind tunnel was down to 10 degrees Fahrenheit. A cylindrical model was in place inside the 10-inch-by-10-inch test section. The wind was blowing through the machine …

Iowa State Engineers Upgrade Pilot Plant for Better Studies of Advanced Biofuels

Lysle Whitmer, giving a quick tour of the technical upgrades to an Iowa State University biofuels pilot plant, pointed to a long series of stainless steel pipes and cylinders. They’re called cyclones, condensers and precipitators, he said, and there’s an art to getting them to work together. The machinery is all about quickly heating biomass …

A Search Engine for Code

“Writing software is kind of like solving a puzzle,” said Kathryn Stolee, the Harpole-Pentair Assistant Professor of Software Engineering. Any programmer who has spent long hours searching for missing code can attest to this analogy. But now, thanks to Stolee’s research and development of Satsy, a new code-specific search engine, digging up those final …

Research on Nanowires Helps Scientists Communicate Across the Fence

A passion for the fundamental sciences and for things that grow motivates Ludovico Cademartiri, an assistant professor in materials science and engineering, as he develops methods for making new materials using polymers and crystals at Iowa State University. In a recent study, Cademartiri led a team of scientists that researched the formation and properties of …

Robotic Weeding Leads to Big Labor Savings

Lie Tang’s research in field robotics offers a glimpse into the future of organic agriculture. Tang, an associate professor in the Department of Agricultural and Biosystems Engineering, develops robotic technologies for intra-row weed removal in vegetable crops. He hopes that by perfecting this technology, he can design an automated robot to lower the level of …

Iowa State Researchers Setting Up “Dream Team” to Research, Develop Nanovaccines

Iowa State University researchers believe that developing nanovaccines using a “systems” approach can revolutionize the prevention and treatment of diseases. Just think: since 1980, the world has seen more new diseases than medical science knew before 1980, said Balaji Narasimhan, Iowa State’s Vlasta Klima Balloun Professor of Chemical and Biological Engineering and leader of a new project designed …

Creating Accountable Anonymity Online

Systems that allow users complete anonymity are being abused. ECpE’s Yong Guan wants to add some accountability. The World Wide Web is, in many ways, still the Wild West. Though a large portion of internet traffic is monitored and traceable, systems like the Tor Project allow users to post and share anything anonymously. Anonymous systems …

Iowa State, Ames Lab Engineers Develop Real-Time 3-D Teleconferencing Technology

Nik Karpinsky quickly tapped out a few computer commands until Zeus, in all his bearded and statuesque glory, appeared in the middle of a holographic glass panel mounted to an office desk. The white statue stared back at Karpinsky. Then a hand appeared and turned the full-size head to the right and to the left. …

Harnessing the Science of Light for Biosensing

It started as a passing fascination with an insect. Andrew Hillier, chair of the Department of Chemical and Biological Engineering and Wilkinson Professor of Interdisciplinary Engineering at Iowa State University, observed a brilliant purple-blue wasp on his driveway at home and wondered at its intense color. “This insect, called a steel blue cricket hunter, is …

Developing New Batteries for Space Exploration

Batteries have become such a modern-day convenience that we often don’t think about them until they need to be recharged or replaced. Even in space, batteries make life easier and advance exploration, powering rovers, astronaut equipment and energy storage devices. But creating a battery for space exploration requires some interesting …

Iowa State Turns on ‘Cyence,’ the Most Powerful Computer Ever on Campus

The most powerful computer ever on the Iowa State University campus – a machine dubbed “Cyence” that’s capable of 183.043 trillion calculations per second with a total memory of 38.4 trillion bytes – is just beginning to produce data for 17 research projects. The thinking and infrastructure behind the new machine will have much broader effects …

Iowa State Engineers Develop New Tests to Cool Turbine Blades, Improve Engines

Engineers know that gas turbine engines for aircraft and power plants are more efficient and burn less fuel when they run at temperatures high enough to melt metal. But how can engineers raise temperatures and efficiencies without damaging engine parts? Iowa State University’s Hui Hu and Blake Johnson, working away in a tight corner …

Iowa State Photobioreactor Research Could Speed Biofuels Development

Photobioreactors, the production systems used to grow algae, seem to operate on a simple concept: place photosynthetic microorganisms in a liquid growth medium and add light. But Dennis Vigil, associate professor of chemical and biological engineering at Iowa State, and his research partner, Michael Olsen, professor of mechanical engineering, know that photobioreactors are much more …

Changing the way engineering feels: A project to improve the accessibility of STEM fields for the visually impaired

Open an engineering textbook, and you’re sure to find charts, graphs, and complex equations. They are hard enough to decipher; now imagine parsing that information if you were blind or visually impaired. Conveying the detailed visual information that goes hand-in-hand with disciplines in the science, technology, engineering, and mathematics (STEM) fields isn’t necessarily impossible. But, according to Cris …

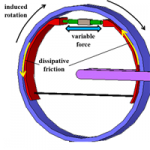

Iowa State Professor to Use Car Brake Technology to Protect Building Structures

Dr. Laflamme’s proposed semi-active damping system works much like car brake technology. Simon Laflamme, assistant professor of civil, construction and environmental engineering, will use car brake technology to help ensure the structural integrity of buildings. Thanks to a recent $200,691 National Science Foundation (NSF) grant, Laflamme will integrate electronic control systems to reduce building movements due to …

Developing Polymer Drugs for Cancer Treatment

Through research on drug delivery systems, Kaitlin Bratlie, assistant professor of materials science and engineering and chemical and biological engineering at Iowa State, is working on a way to treat cancers that don’t respond well to current treatments. Her project has been underway for just a year and is several years away from clinical trials, …

Iowa State’s MIRAGE lab mixes real and virtual to create new research opportunities

Two armed soldiers stand behind a barrier, guarding a checkpoint in the road, watching for trouble. A white truck turns toward them. “See what he wants, guys,” says one of the guards. “Sir, we have a military-aged male jumping out of the truck. He’s going behind that van.” “Is he armed?” “No visuals, sir.” And so goes another …

“Sensing Skin” for Turbines Could Reduce the Cost of Wind Energy

A project that started out as a side experiment to monitor bridge damage has since evolved into a revolutionary, cost-saving solution in the world of wind energy. Simon Laflamme, assistant professor of civil, construction, and environmental engineering at Iowa State, began developing a damage-detecting polymer “skin” as a student at MIT. The skin, which is made into …

Iowa State Engineer looks to Dragonflies, Bats for Flight Lessons

Ever since the Wright brothers, engineers have been working to develop bigger and better flying machines that maximize lift while minimizing drag. There has always been a need to efficiently carry more people and more cargo. And so the science and engineering of getting large aircraft off the ground is very well understood. But what about flight …

Grad student, professor advance soil test technology invented by emeritus professor

An Iowa State University geotechnical engineering research duo has automated a soil strength test invented by an Iowa State civil engineering professor emeritus. Jeramy Ashlock, assistant professor and Black & Veatch Building a World of Difference Faculty Fellow, and geotechnical engineering master’s graduate Ted Bechtum have automated the Borehole Shear Test (BST), an on-site soil strength …